How To Upload A Website Using Google Search Console For SEO

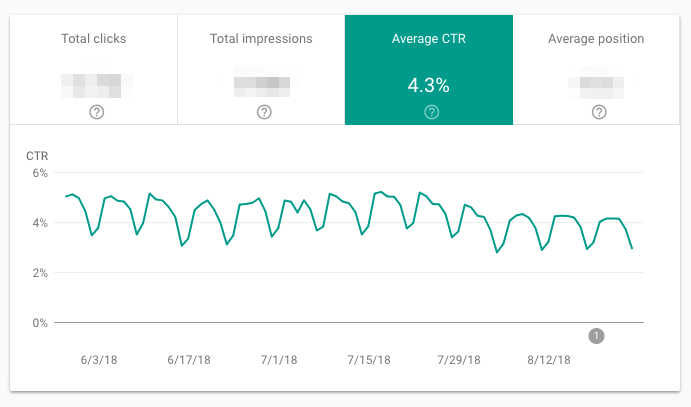

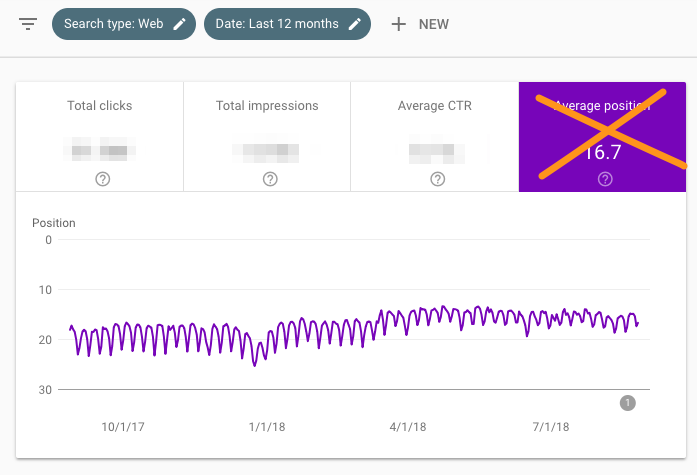

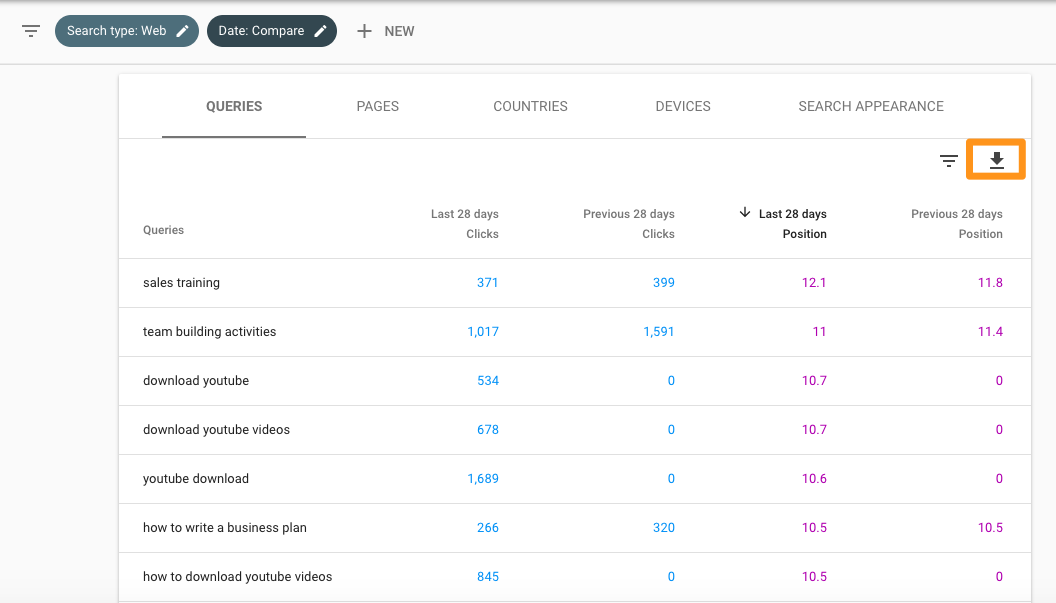

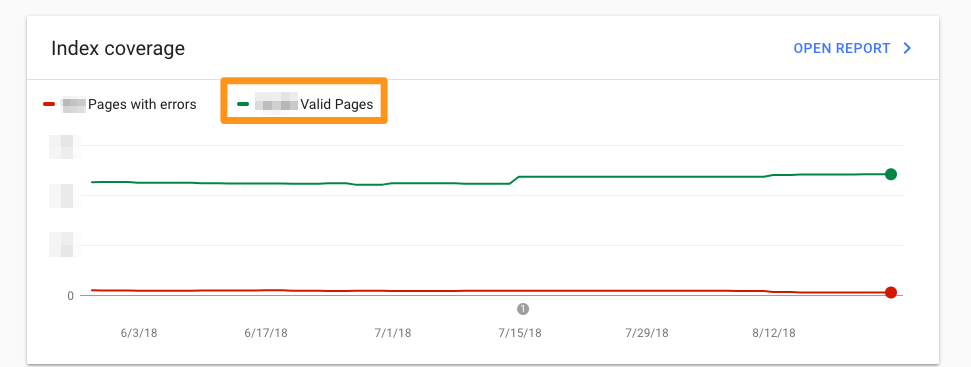

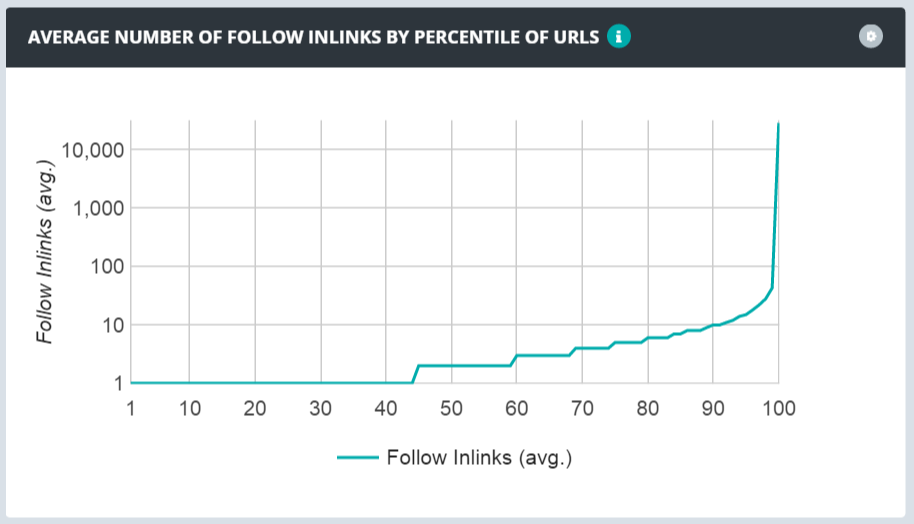

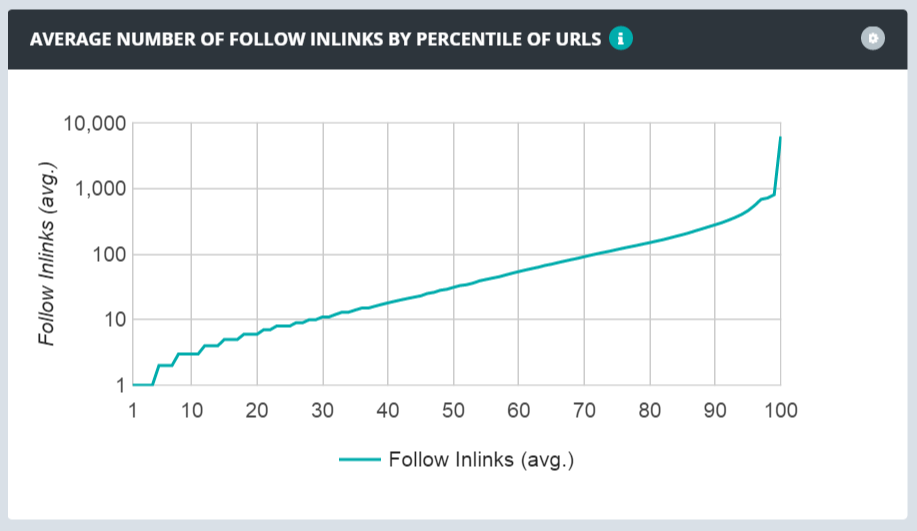

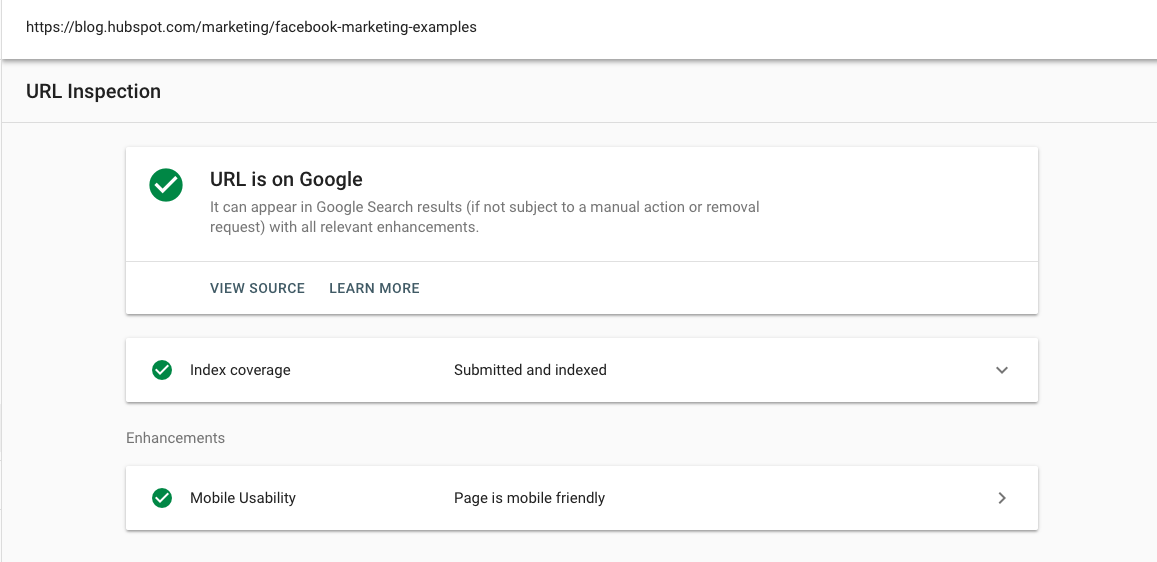

Google Search Console (formerly Google Webmaster Tools) is a free platform for anyone with a website to monitor how Google views their site and optimize its organic presence. That includes viewing your referring domains, mobile site performance, rich search results, and highest-traffic queries and pages.

At any given time, I have GSC open in 2 to 10 tabs. It's helpful on a macro and micro level -- both when I need to see how many impressions HubSpot is gaining month over month and when I need to figure out what's happened to a high-traffic blog post that suddenly fell. I'm a content strategist on HubSpot's SEO team, which means GSC is especially useful to me. But anyone who's got a website can and should dip their toes in these waters. According to Google, whether you're a business owner, SEO specialist, marketer, site administrator, web developer, or app creator, Search Console will come in handy.

I remember the first time I opened GSC -- and it was overwhelming. There were tons of labels I didn't understand (index coverage?!?), hidden filters, and confusing graphs. Of course, the more I used it, the less confusing it became. But if you want to skip the learning curve (and why wouldn't you), good news: I'm going to reveal everything I've learned about how to use Google Search Console like a pro.

First things first. If you haven't already signed up for GSC, it's time to do so. Google starts tracking data for your property as soon as you add it to GSC -- even before it's verified you're the site owner.

Because GSC gives you access to confidential information about a site or app's performance (plus influence over how Google crawls that site or app!), you have to verify you own that site or app first. Verification gives a specific user control over a specific property.
You must have at least one verified owner per GSC property. Also, note that verifying your property doesn't affect PageRank or its performance in Google search. Of course, you can use GSC data to strategize how to rank higher -- but simply adding your website to GSC won't automatically make your rankings go up. Google-hosted sites, including Blogger and Sites pages, are automatically verified.

True or false: hubspot.com and www.hubspot.com are the same domain. The answer? False! Each domain represents a different server; those URLs might look very similar, but from a technical perspective, they're two unique domains.

However, if you type "hubspot.com" into your browser bar, you'll land at "www.hubspot.com". What is this sorcery? HubSpot has chosen "www.hubspot.com" as its preferred, or canonical, domain. That means we've told Google we want all of our URLs displayed in search as "www.hubspot.com/……". And when third parties link to our pages, those URLs should be treated as "www.hubspot.com/……" as well.

If you don't tell GSC which domain you prefer, Google may treat the www and non-www versions of your domain as separate -- splitting all those page views, backlinks, and engagement into two. Not good. (At this time you should also set up a 301 redirect from your non-preferred domain to your preferred one, if you haven't already.)

There are two GSC role-types. I know you might be itching to get to the good stuff (cough, the data), but it's important to do this right.

Think carefully about who should have which permissions. Giving everyone full ownership could be disastrous -- you don't want someone to accidentally change an important setting. Try to give your team members just as much authority as they need and no further. For example, at HubSpot, our technical SEO manager Victor Pan is a verified owner.
I'm an SEO content strategist, which means I use GSC heavily but don't need to change any settings, so I'm a delegated owner. The members of our blogging team, who use GSC to analyze blog and post performance, are full users. Here are detailed instructions on adding and removing owners and users in Search Console.

There's a third role: an associate. You can associate a Google Analytics property with a Search Console account -- which will let you see GSC data in GA reports. You can also access GA reports in two sections of Search Console: links to your site, and Sitelinks. A GA property can only be associated with one GSC site, and vice versa. If you're an owner of the GA property, follow these instructions to associate it with the GSC site.

A sitemap isn't necessary to show up in Google search results. As long as your site is organized correctly (meaning pages are logically linked to each other), Google says its web crawlers will normally find most of your pages. But there are four situations in which a sitemap will improve your site's crawlability:

Once you've built your sitemap, submit it using the GSC sitemaps tool. After Google has processed and indexed your sitemap, it will appear in the Sitemaps report. You'll be able to see when Google last read your sitemap and how many URLs it's indexed.

There are a few terms you should understand before using GSC.

A query is a search term that generated impressions of your site page on a Google SERP. You can only find query data in Search Console, not Google Analytics.

Each time a link URL appears in a search result, it generates an impression. The user doesn't have to scroll down to see your search result for the impression to count.

When the user selects a link that takes them outside of Google Search, that counts as one click. If the user clicks a link, hits the back button, then clicks the same link once more -- still one click.
If they then click a different link -- that's two clicks. When a user clicks a link inside Google Search that runs a new query, that's not counted as a click. Also, this doesn't include paid Google results.

Average position is the mean ranking of your page(s) for a query or queries. Suppose our guide to SEO tools is ranking #2 for "SEO software" and #4 for "keyword tools." The average position for this URL would be 3 (assuming we were ranking for literally nothing else).

CTR, or click-through rate, is equal to Clicks divided by Impressions, multiplied by 100. If our post shows up in 20 searches, and generates 10 clicks, our CTR would be 50%.

GSC offers several different ways to view and parse your data. These filters are incredibly handy, but they can also be confusing when you're familiarizing yourself with the tool.

There are three search types: web, image, and video. I typically use "web," since that's where most of the HubSpot Blog traffic comes from, but if you get a lot of visits from image and/or video search, make sure you adjust this filter accordingly. You can also compare two types of traffic. Just click the "Compare" tab, choose the two categories you're interested in, and select "Apply." This can lead to some interesting findings. For example, I discovered this color theory 101 post is getting more impressions from image search than web (although the latter is still generating more clicks!).

GSC now offers 16 months of data (up from 90 days). You can choose from a variety of pre-set time periods or set a custom range. As with search type, you can also compare two date ranges in the "Compare" tab.

Click "New" next to the Date filter to add up to five other types of filters: query, page, country, device, and search appearance.
These filters can be layered; for instance, if I wanted to see data for SEO-related queries appearing on mobile search, I'd add a filter for queries containing "SEO" on mobile devices. If I wanted to limit the results even further to posts on the Marketing Blog, I'd add another filter for Pages containing the URL "blog.hubspot.com/marketing". You can get very specific here -- I recommend playing around with different combinations of filters so you can see what's possible.

The index coverage report shows you the status of every page Google has tried to index on your site. Using this report, you can diagnose any indexing issues. Each page is assigned one of four statuses:

In this area, you can make your sitemap available to Google and see its status.

Can you see why I love GSC? Let's dig into each use case.

Note: It's useful to look at this in tandem with "Impressions" (check "Total impressions" to see this information side-by-side). A page might have high CTR but low impressions, or vice versa -- you won't get the full picture without both data points.

I recommend keeping an eye on CTR. Any significant movement tells you something: If it's dropped, but impressions have gone up, you're simply ranking for more keywords, so average CTR has declined. If CTR has increased, and impressions have decreased, you've lost keywords. If both CTR and impressions have gone up, congrats -- you're doing something right!

As you create more content and optimize your existing pages, your impressions should increase. (As always, there are exceptions -- maybe you decided to target a small number of high conversion keywords rather than a lot of average conversion ones, are focusing on other channels, etc.)

Average position isn't that useful on a macro level. Most people are concerned when it goes up -- but that's shortsighted.
If a page or set of pages starts ranking for additional keywords, average position usually increases; after all, unless you're ranking for the exact same position or better as your existing keywords, your "average" will get bigger. Don't pay too much attention to this metric.

Because you're looking at average position by URL, that number is the mean of all of that page's rankings. In other words, if it's ranking for two keywords, it might be #1 for a high-volume query and #43 for a low-volume one -- but the average will still be 22. With that in mind, don't judge the success or failure of a page by "average position" alone.

Follow the same steps that you would to identify your highest-ranking pages, except this time, toggle the small up arrow next to "Position" to sort from highest (bad) to lowest (good).

At this point, you can look at the data in GSC, or export it. For an in-depth analysis, I highly recommend the latter -- it'll make your life much easier. To do so, click the downward arrow beneath "Search Appearance," then download it as a CSV file or export it to Google Sheets. After you have this data in spreadsheet form, you can add a column for the position differences (Last 28 days Position - Previous 28 days Position), then sort by size. Because position 1 is the top result, a negative difference means your page has moved up the results for that query. If the difference is positive, you've dropped.

Knowing which queries bring in the most search traffic is definitely useful. Consider optimizing the ranking pages for conversion, periodically updating them so they maintain their rankings, putting paid promotion behind them, using them to link to lower-ranked (but just as important, if not more so) relevant pages, and so on.
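The position-difference column described above can also be computed outside a spreadsheet. The following is a minimal sketch, not an official export format: the column names and every number in `EXPORT` are hypothetical and stand in for whatever your downloaded CSV actually contains. It uses the same formula (last 28 days minus previous 28 days) and sorts by the size of the change. Since position 1 is the top result, a negative difference means the page now sits closer to the top.

```python
import csv
import io

# Hypothetical export: column names and numbers are made up for illustration.
EXPORT = """query,last_28_days_position,previous_28_days_position
seo software,2.0,4.5
keyword tools,6.1,4.0
content strategy,9.0,9.0
"""


def position_changes(csv_text):
    """Return one row per query with (last - previous), biggest swings first."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        last = float(row["last_28_days_position"])
        previous = float(row["previous_28_days_position"])
        rows.append({"query": row["query"], "difference": round(last - previous, 2)})
    # Sort by the size of the change, regardless of direction.
    return sorted(rows, key=lambda r: abs(r["difference"]), reverse=True)


changes = position_changes(EXPORT)
for change in changes:
    diff = change["difference"]
    if diff < 0:
        trend = "closer to the top"
    elif diff > 0:
        trend = "further from the top"
    else:
        trend = "unchanged"
    print(f'{change["query"]}: {diff:+.2f} ({trend})')
```

Sorting by absolute value keeps the biggest gains and the biggest losses together at the top of the list, which is usually where the follow-up work is.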
The total number of indexed pages on your site should typically go up over time as you:

If indexing errors go up significantly, a change to your site template might be to blame (because a large set of pages have been impacted at once). Alternatively, you may have submitted a sitemap with URLs Google can't crawl (because of 'noindex' directives, robots.txt, password-protected pages, etc.). If the total number of indexed pages on your site drops without a proportional increase in errors, it's possible you're blocking access to existing URLs. In any case, try to diagnose the issue by looking at your excluded pages and looking for clues.

Every backlink is a signal to Google that your content is trustworthy and useful. In general, the more backlinks the better! Of course, quality matters -- one link from a high-authority site is much more valuable than two links from low-authority sites. To see which sites are linking to a specific page, simply double-click that URL in the report.

If you want to help a page rank higher, adding a link from a page with a ton of backlinks is a good bet. Those backlinks give that URL a lot of page authority -- which it can then pass on to another page on your site with a link.

Knowing your top referring domains is incredibly useful for promotion -- I'd recommend starting with these sites whenever you do a link-building campaign. (Just make sure to use a tool like Moz, SEMrush, or Ahrefs to filter out the low-authority ones first.) These may also be good candidates for comarketing campaigns or social media partnerships.

Anchor text should be as descriptive and specific as possible -- and best case scenario, include your keyword. If you find websites linking to your pages but using anchor text like "Click here", "Learn more", "Check it out", etc., consider sending an email asking them to update the hyperlink.
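If you export your anchor-text data, the generic phrases mentioned above are easy to flag automatically. This is a rough sketch under stated assumptions: the `links` list is made-up sample data, the site names are placeholders, and `is_generic` is a hypothetical helper that only knows the three phrases quoted in this post.

```python
# Generic phrases quoted in the article; extend this set for your own audit.
GENERIC_ANCHORS = {"click here", "learn more", "check it out"}


def is_generic(anchor_text):
    """True if the anchor text is one of the known non-descriptive phrases."""
    normalized = anchor_text.strip().strip(".!").lower()
    return normalized in GENERIC_ANCHORS


# Hypothetical (linking site, anchor text) pairs, for illustration only.
links = [
    ("example.com", "Click here"),
    ("example.org", "guide to Google Search Console"),
    ("example.net", "Learn more."),
]

# Sites worth emailing to ask for a more descriptive anchor.
outreach = [site for site, text in links if is_generic(text)]
print(outreach)
```

An exact-match set is deliberately conservative: it will miss variants such as "click here to read", but it won't flag descriptive anchors by accident.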
It's normal for some URLs to have more inbound links. For instance, if you run an ecommerce site, every product page in your "Skirts" category will link back to the "Skirts" overview page. That's a good thing: It tells Google your top-level URLs are the most important (which helps them rank higher).

However, a heavily skewed link distribution ratio isn't ideal. If a tiny percentage of your URLs are getting way more links than the rest, it'll be hard for the 95% to receive search traffic -- you're not passing enough authority to them.

Here's what a heavily skewed distribution looks like:

The optimal spread looks like this:

Use GSC's link data to learn how your links are distributed and whether you need to focus on making your link distribution smoother.

Google recommends fixing errors before looking at the pages in the "Valid with warnings" category. By default, errors are ranked by severity, frequency, and whether you've addressed them.

Here's how to interpret the results. If the URL is on Google, that means it's indexed and can appear in search. That doesn't mean it will -- if it's been marked as spam or you've removed or temporarily blocked the content, it won't appear. Google the URL; if it shows up, searchers can find it.

Open the Index coverage card to learn more about the URL's presence on Google, including which sitemaps point to this URL, the referring page that led Googlebot to this URL, the last time Googlebot crawled this URL, whether you've allowed Googlebot to crawl this URL, whether Googlebot actually could fetch this URL, whether this page disallows indexing, the canonical URL you've set for this page, and the URL Google has selected as the canonical for this page.
The Enhancements section gives you data on:

Editor's note: This post was originally published in October 2018 and has been updated for comprehensiveness.

What is Google Search Console?

How to Add Your Website to Google Search Console

Verifying Your Site on GSC

GSC Verification Methods

URL Versions: WWW Domain or Not?

GSC Users, Owners, and Permissions

Do You Need a Sitemap?

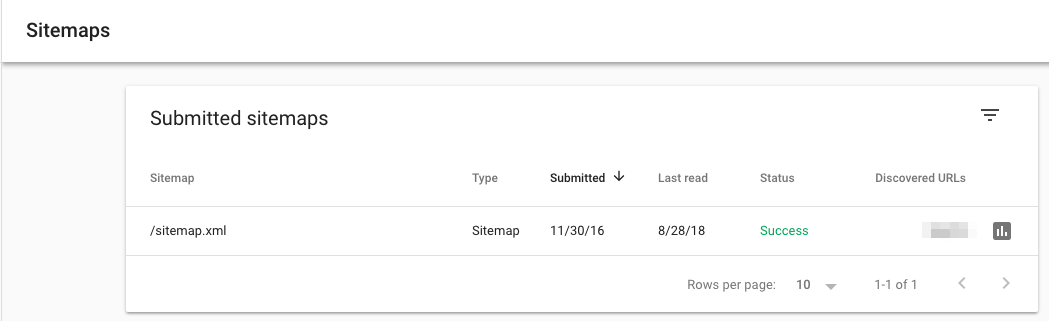
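If you decide you do need a sitemap, the file itself is plain XML. Here is a minimal sketch of building one with Python's standard library, assuming the sitemaps.org protocol namespace and two placeholder URLs on example.com; `build_sitemap` is a hypothetical helper, and a production sitemap would normally also carry an XML declaration and optional fields such as `lastmod`.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap document (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Placeholder pages, for illustration only.
pages = ["https://www.example.com/", "https://www.example.com/blog/"]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Listing the URLs under your preferred (canonical) domain keeps the sitemap consistent with the www versus non-www choice discussed earlier.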

GSC Sitemaps Report

GSC Dimensions and Metrics

What's a Google Search Console query?

What's an impression?

What's a click?

What's average position?

What's CTR?
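The CTR and average position definitions given earlier reduce to two small formulas. The sketch below reproduces the post's own numbers (10 clicks on 20 impressions; rankings of #2 and #4; rankings of #1 and #43). The function names are illustrative, and the average here is the simple unweighted mean the post describes, which is an assumption about the simplest case rather than a statement of how Search Console computes the metric internally.

```python
from typing import Sequence


def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate as a percentage: clicks / impressions * 100."""
    if impressions == 0:
        return 0.0  # assumption: report 0% when there were no impressions
    return clicks / impressions * 100


def average_position(positions: Sequence[float]) -> float:
    """Unweighted mean of a page's ranking positions across its queries."""
    if not positions:
        raise ValueError("need at least one position")
    return sum(positions) / len(positions)


print(ctr(10, 20))                # 50.0
print(average_position([2, 4]))   # 3.0
print(average_position([1, 43]))  # 22.0
```

The last line shows why average position can mislead: a #1 ranking and a #43 ranking average out to 22, which describes neither.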

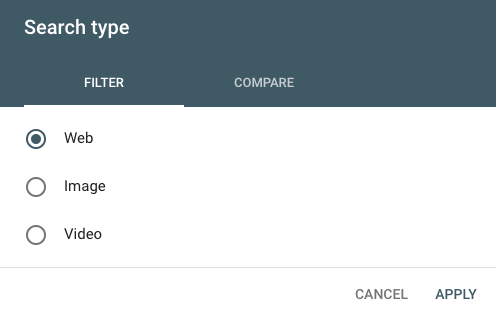

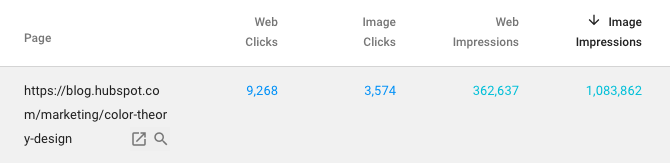

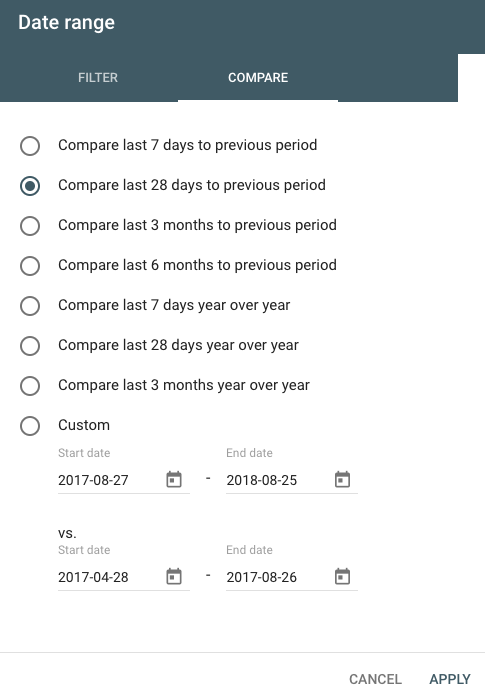

Filtering in Google Search Console

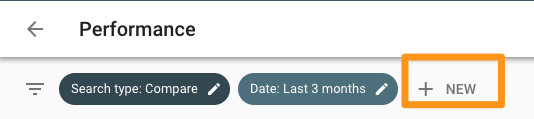

Search Type

Date Range

Queries, Page, Country, Device, Search Advent

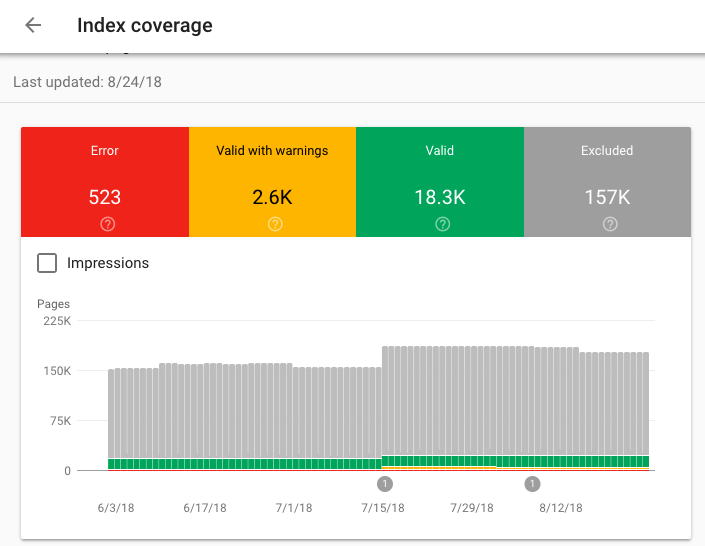

Index Coverage Study

Submitted Sitemaps

How to Use Google Search Panel

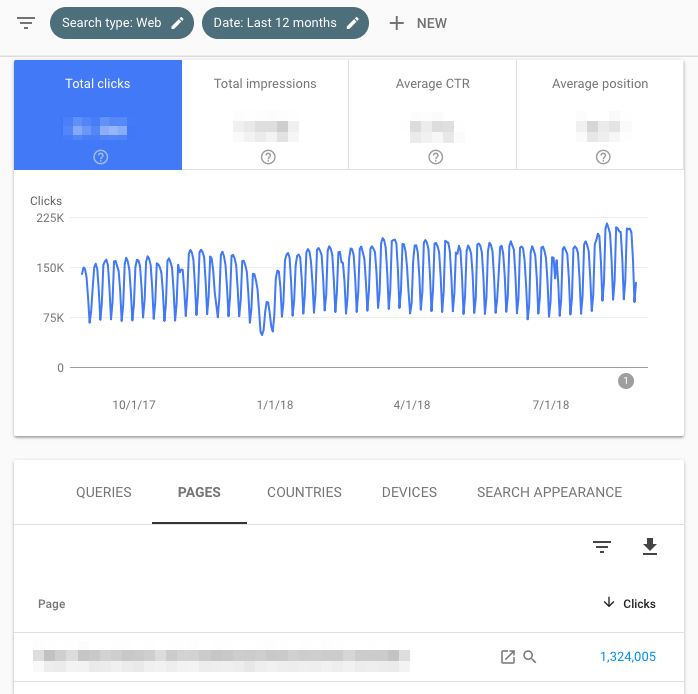

ane. Identify your highest-traffic pages.

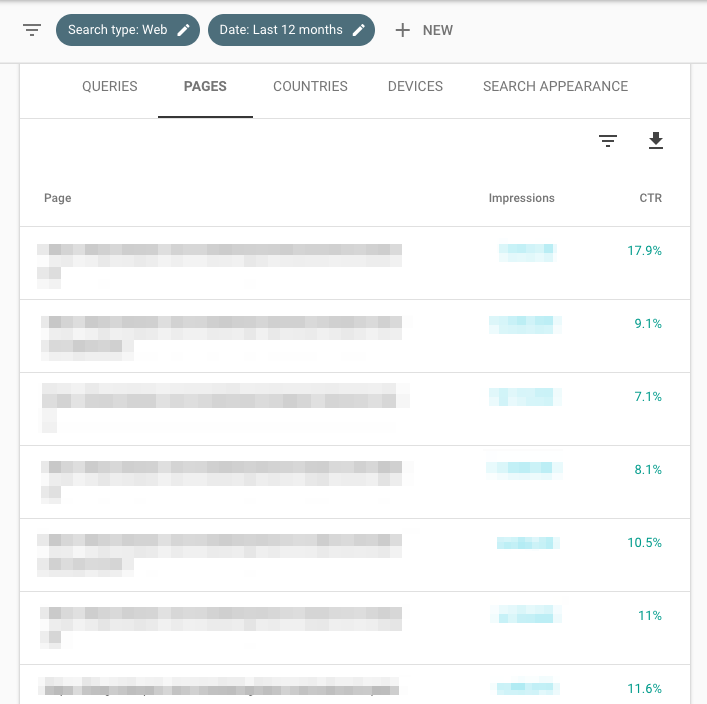

2. Place your highest-CTR queries.

3. Look at boilerplate CTR.

iv. Monitor your CTR over time.

5. Monitor your impressions over time.

6. Monitor average position over time.

7. Identify your highest-ranking pages.

8. Identify your lowest-ranking pages.

9. Identify ranking increases and decreases.

10. Identify your highest-traffic queries.

11. Compare your site's search performance across desktop, mobile, and tablet.

12. Compare your site's search performance across different countries.

13. Learn how many of your pages have been indexed.

14. Learn which pages haven't been indexed and why.

15. Monitor total number of indexed pages and indexing errors.

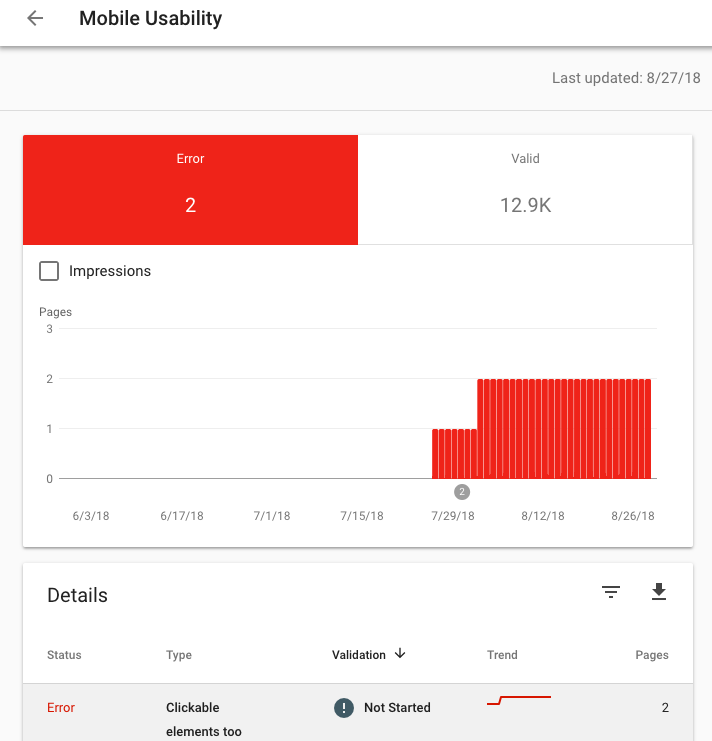

xvi. Place mobile usability bug.

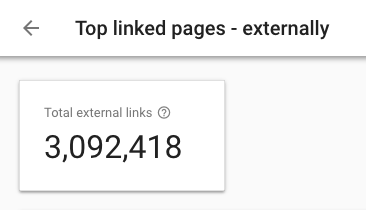

17. Acquire how many total backlinks your site has.

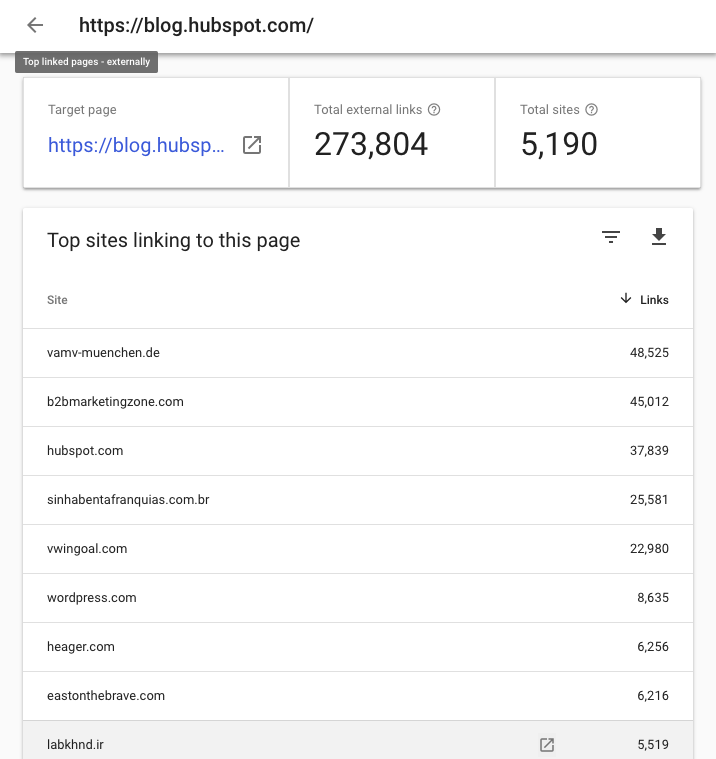

18. Identify which URLs have the most backlinks.

xix. Place which sites link to y'all the about.

xx. Identify the most popular anchor text for external links.

21. Identify which pages have the near internal links.

22. Learn how many total internal links your site has.

23. Notice and prepare AMP errors.

24. Run into Google how Google views a URL.

Originally published Feb 25, 2021 7:00:00 AM, updated February 25 2021

Source: https://blog.hubspot.com/marketing/google-search-console

Posted by: baronmoreary.blogspot.com
